Generating evidence about design enabled innovation.

In Designscapes we’re dealing with the take-up and scaling of design enabled innovation in urban environments. By funding dozens of initiatives to develop, pilot, commercialise and potentially scale innovations, we want both to support the uptake of design enabled innovation and to build relevant capacity among citizens, researchers, practitioners, innovators and policymakers.

One of the challenges currently facing those interested in using design enabled innovation is that evidence on its impact is weak. Whilst guidelines, frameworks and toolkits do exist (e.g. the DeEp project has developed a toolkit for evaluating the effects of design policies), actual published evaluations are scarce (e.g. there is no published work on the topic in Europe’s leading professional evaluation journal Evaluation).

Evaluation is therefore an integral part of Designscapes project activities. It will:

- Provide the project with a framework, approach and tools to enable it to capture and understand whether and in what ways it has achieved its objectives;

- Produce outputs (most notably an impact evaluation methodology and indicators) that are transferable beyond the project and can be applied at organisational, regional, national and European levels.

To achieve this, we have developed a comprehensive framework to guide ex ante, process / formative and summative evaluation activities.

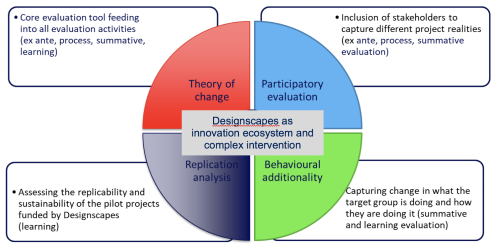

The four building blocks of our approach, which will be accompanied by a toolkit operationalising it, are aligned with the nature of both the Designscapes project and design enabled innovation tools and processes:

- Theory of change will help us extract and continuously refine the theory of how Designscapes as a project, as well as individual funded pilots, will effect change. Through data collection and participatory exercises we will hone these theories over the coming three and a half years or so to build a contribution story about the impact of design enabled innovation at city level.

- We will involve stakeholders (via participatory evaluation) in the design, elaboration and sense-making of evaluation tools and data to ensure these are fit for purpose, meet needs and bring in the multiplicity of voices that are needed to draw the ‘right’ conclusions.

- Rather than counting changes in outputs, our analysis of project results will focus on behavioural additionality – the multiplicity of changes to the behaviours of Designscapes participants as a result of the project’s activities.

- Finally, replication analysis will identify ‘what works’ and the transferable components of the pilots funded by Designscapes.

An important component of the Designscapes evaluation is to support pilots in building their evaluation capacity, by enhancing their understanding of evaluation and its benefits, as well as their ability to collect data and potentially self-evaluate. So we’ll run a number of online and face-to-face events on different evaluation-related topics, timed to coincide with the opening of each stage of the call for pilots.

We will share the evaluation approach and the thinking behind it at ICE 2018 as part of a number of contributions by the Designscapes project to the event. The conference takes place from 17 to 20 June 2018 in Stuttgart.

Dr Kerstin Junge

Principal Researcher / Consultant

The Tavistock Institute of Human Relations